Vignesh Kothapalli

Hi, I’m a Senior ML Engineer in the Foundation Models and Data team at LinkedIn AI. My work centers on post-training techniques for enhancing multi-task learning in large language models. I earned my MSc in Computer Science at NYU Courant, where I worked with Prof. Joan Bruna on the Neural Collapse phenomenon. I have also contributed to TensorFlow and served as a maintainer for TensorFlow-IO during my time at IBM. I hold a B.Tech in Electronics and Communication Engineering from IIT Guwahati.

Research

I’m primarily interested in understanding the computational aspects of learning in neural networks, and using these insights to develop novel modeling techniques.

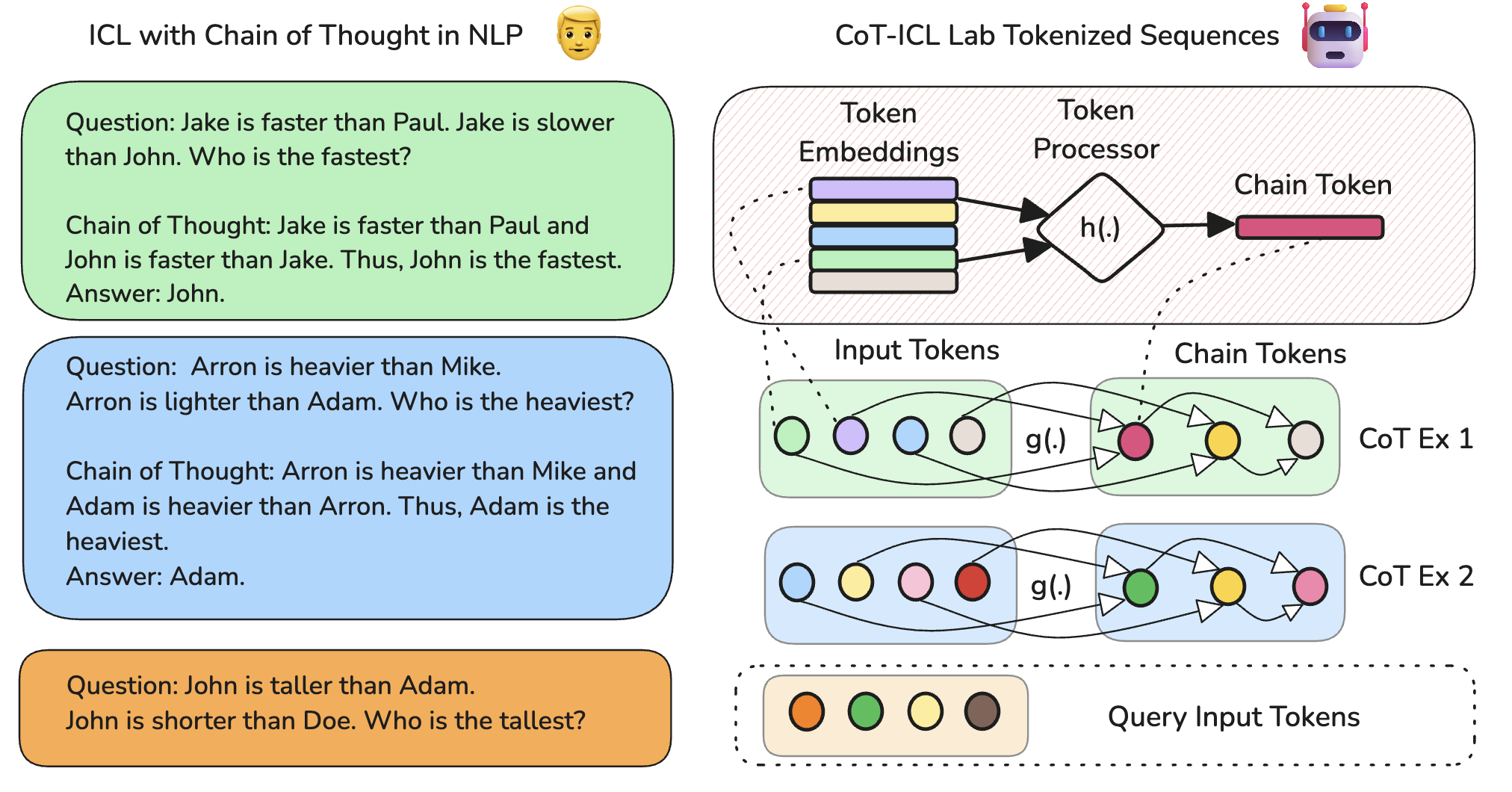

- Foundation Models: Chain-of-Thought reasoning, In-Context Learning and Multi-Task Learning in Transformer-based LLMs.

- Learning Dynamics: Understanding feature evolution, weight matrix spectra and generalization in neural networks.

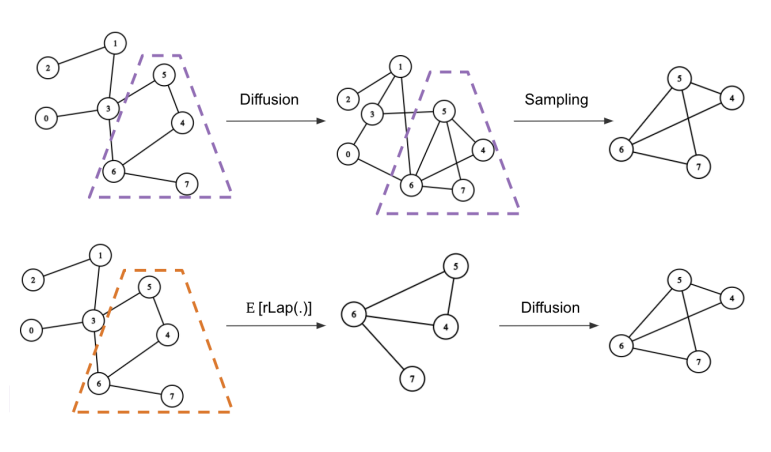

- Efficient Training/Inference: Developing low-level (randomized) algorithms for training and inference of large-scale LLMs/GNNs.

What’s New

- [2025.06] Our “Liger Kernel” paper has been accepted to CODE ML Workshop, ICML 2025!

- [2025.05] The “CoT-ICL Lab” paper has been accepted to ACL Main 2025!

- [2025.04] “Can Kernel Methods Explain How the Data Affects Neural Collapse?” has been accepted to TMLR!

- [2025.02] Our tech-report on training and deploying production-grade LLMs at LinkedIn is available on arxiv!

- [2025.01] The 360Brew foundation model tech-report is available on arxiv!

- [2024.10] Our tech-report on Liger-Kernels is now available on arxiv. (GPU Mode/Lightning AI/AMD+Embedded LLM/Blog)

Academic Service

- Conference Reviewer: NeurIPS 2024, ICML 2025

- Journal Reviewer: IEEE Transactions on Cybernetics, IEEE Access, IEEE Transactions on Industrial Informatics, TMLR

Selected Publications

See full list at Google Scholar.

- [TMLR] Neural Collapse: A Review on Modelling Principles and Generalization. Transactions on Machine Learning Research, 2023